If you’re anything like me, you’ve probably heard the term quantum computing thrown around more lately.

But, what’s with all the hype around quantum computing?

Quantum computing harnesses quantum mechanical phenomena to perform computation, so before we go into the nitty-gritty details of all quantum computing has to offer, there are a few fundamental quantum mechanical concepts we need to understand. Let’s rewind about a century and see how all this started:

First things first: Electrons are weird, and that weirdness is the foundation for all things quantum.

Electrons act like both a particle and a wave. Yes, the “and” is not a typo. So what exactly does this mean? To find out, let’s take a look at how particles and waves behave in Thomas Young’s famous double-slit experiment!

Suppose you have two slits in a piece of cardboard placed in front of a wall and you shoot some particles through the slits. This is what you’d see on the wall:

Suppose you still have two slits in a piece of cardboard placed in front of a wall, but instead you send waves through the slits. This is what you’d see on the wall:

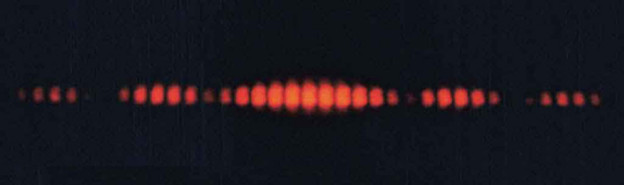

Now let’s suppose we send a beam of electrons through the two slits. This is what you’d see on the wall:

As you can see, the pattern created by sending electrons through the two slits shows features of both: each electron lands at a single spot like a particle, yet the spots build up into the striped interference pattern of a wave. This behavior is known as wave-particle duality. We’ll come back to this in a bit.
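To make the difference between the two patterns concrete, here is a minimal sketch of an idealized double slit. It assumes two point-like slits and made-up values for the slit separation, wall distance, and wavelength (none of these numbers come from a real experiment). The only difference between the two cases is what gets added: waves add their amplitudes and then square, while classical particles just add their individual intensities.

```python
import cmath
import math

def path_amplitude(slit_y, wall_y, distance, wavelength):
    """Unit-strength wave amplitude after travelling from a slit to a point on the wall."""
    path_length = math.hypot(distance, wall_y - slit_y)
    return cmath.exp(2j * math.pi * path_length / wavelength)

def intensities(wall_y, slit_separation=1.0, distance=100.0, wavelength=0.1):
    """Return (wave_intensity, particle_intensity) at one point on the wall.

    All default values are illustrative assumptions in arbitrary units.
    """
    a1 = path_amplitude(+slit_separation / 2, wall_y, distance, wavelength)
    a2 = path_amplitude(-slit_separation / 2, wall_y, distance, wavelength)
    wave = abs(a1 + a2) ** 2               # amplitudes add, so they can cancel
    particle = abs(a1) ** 2 + abs(a2) ** 2  # intensities add, so no cancellation
    return wave, particle

if __name__ == "__main__":
    for y in range(0, 11):
        wave, particle = intensities(float(y))
        print(f"y={y:2d}  wave={wave:5.2f}  particle={particle:5.2f}")
```

In this toy model the wave column swings between bright and dark bands as you move along the wall, while the particle column stays flat. Electrons follow the wave column, even though each one arrives as a single dot.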

Next, in the early 20th century, the model of an atom was continuously being refined. Initially, there was J. J. Thomson’s plum pudding model, where all the electrons (negatively charged) were buried in a ball of positive charge — sort of like blueberries in a blueberry muffin.

However, Ernest Rutherford came along and performed a few experiments. Basically, he shot alpha particles at a gold foil and saw that most of the particles went straight through, while a small fraction bounced back at large angles. But according to Thomson’s model, this shouldn’t be possible; after all, how can a diffuse ball of positive charge knock a fast alpha particle backwards? So Rutherford concluded that most of the atom must be empty space and came up with the Rutherford atomic model, where the positively charged stuff is packed into a tiny center (aka the nucleus) with electrons around it, kind of like our Solar System.

While Rutherford’s model was an improvement, it didn’t provide any explanation as to why none of the electrons would spiral into the nucleus, which should occur according to classical physics. Turns out classical physics fails at the atomic level…

That’s where Niels Bohr and Erwin Schrödinger come in. Bohr declared that the electrons occupy discrete energy levels or orbits around the nucleus; the closer the electron is to the nucleus, the less energy the electron has. Electrons can also move from one energy level to another by absorbing or radiating energy. Schrödinger built off this thought by stating that electrons don’t just occupy orbits, but rather, due to their wave-like nature, occupy regions in space known as orbitals, giving rise to the Electron Cloud Model or the Quantum Mechanical Model. An electron can be anywhere within its region of space; there’s no way to predict the exact location. Instead, we can only find the probability that an electron is in a given region, which is elegantly represented by the Schrödinger wave equation. The Electron Cloud Model is the currently accepted atomic model.
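To see what discrete energy levels look like in numbers, here is a small sketch for the simplest case, a hydrogen atom. Hydrogen is my choice of example and is not mentioned above; the sketch uses the standard Bohr result that level n has energy of about -13.6 eV divided by n squared, and the function names are made up for illustration.

```python
RYDBERG_EV = 13.605693  # hydrogen ground-state binding energy, in electronvolts
HC_EV_NM = 1239.841984  # Planck constant times speed of light, in eV * nm

def energy_level(n):
    """Energy of Bohr level n in hydrogen (eV); more negative means closer to the nucleus."""
    return -RYDBERG_EV / n ** 2

def photon_wavelength_nm(n_from, n_to):
    """Wavelength of the photon absorbed or emitted when jumping between two levels."""
    delta_e = abs(energy_level(n_from) - energy_level(n_to))
    return HC_EV_NM / delta_e

if __name__ == "__main__":
    for n in range(1, 5):
        print(f"n={n}: {energy_level(n):.2f} eV")
    print(f"3 -> 2 jump: {photon_wavelength_nm(3, 2):.0f} nm")
```

The jump from level 3 to level 2 lands in the red part of the visible spectrum, which is one of the lines you actually see in hydrogen’s glow. Only certain colors show up because only certain jumps exist.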

Wow. That was a lot of information, and by now, you may be wondering why the electron’s weirdness, wave-particle duality, Schrödinger’s wave equation, and the electron cloud matter. It’s because not only do many quantum mechanical phenomena like superposition, entanglement, and measurement result from these concepts, but everything related to quantum computing builds off of them. Of course, everything I talked about here is only a very small portion of the history of quantum mechanics, so feel free to look more into it.

As a quick side note, here is a description of what superposition, entanglement, and measurement are:

- Superposition refers to how a quantum object can exist in a combination of multiple states at the same time due to its wave-like nature. Like electrons in an atom, we can never know with certainty which state we will find the quantum object in, so we express the possible states for the quantum object in superposition with probabilities.

- Entanglement refers to how the wave-like nature of quantum objects allows the waves of several quantum objects to essentially add up and become expressed as only one wave. Once that happens, the objects can no longer be described separately: measuring one of them immediately tells you something about all the other entangled quantum objects.

- Measurement refers to how when we observe a quantum object, it immediately collapses to one state from superposition. This means two things: we will never be able to observe a quantum object in superposition and the state that it collapses to is entirely probabilistic.
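The three ideas above can be sketched in a few lines of plain Python. This is a toy model, not how a real quantum computer or simulator library works: a state is just a list of amplitudes, and the helper names `measure` and `sample_counts` are made up for this example. Measuring picks one outcome with probability equal to the squared size of its amplitude.

```python
import math
import random

def measure(amplitudes, rng):
    """Collapse a state to one outcome index, chosen with probability |amplitude|^2."""
    probabilities = [abs(a) ** 2 for a in amplitudes]
    return rng.choices(range(len(amplitudes)), weights=probabilities, k=1)[0]

def sample_counts(amplitudes, shots, seed=0):
    """Prepare and measure the same state `shots` times and tally the outcomes."""
    rng = random.Random(seed)
    counts = {}
    for _ in range(shots):
        outcome = measure(amplitudes, rng)
        counts[outcome] = counts.get(outcome, 0) + 1
    return counts

# One qubit in an equal superposition of 0 and 1.
PLUS = [1 / math.sqrt(2), 1 / math.sqrt(2)]

# Two entangled qubits; outcome indices 0..3 stand for 00, 01, 10, 11.
BELL = [1 / math.sqrt(2), 0, 0, 1 / math.sqrt(2)]

if __name__ == "__main__":
    print("superposition:", sample_counts(PLUS, shots=1000))
    print("entangled pair:", sample_counts(BELL, shots=1000))
```

Each single measurement of the superposition gives a definite 0 or 1, never “both”; only the tally over many runs reveals the roughly 50/50 split. For the entangled pair, the outcomes 01 and 10 never appear, so the two qubits always agree even though each result on its own is random.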

Now that you have a bit of an idea of how quantum mechanics came to be, let’s dive into the hype surrounding quantum computing.

One of the primary reasons there is so much hype surrounding quantum computing is the added computational power. The quantum bits (or qubits) in quantum computers utilize superposition, entanglement, and measurement. Describing the state of n qubits takes 2^n numbers, so the space a quantum computer works in grows exponentially with every qubit you add, and for certain problems it could perform calculations that even the world’s fastest supercomputer would take billions of years to finish. At around 50 qubits, simply simulating the machine already strains the memory of a traditional computer.
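A quick back-of-the-envelope calculation shows why the qubit count matters so much. The sketch below assumes a classical simulator that stores one complex number per possible state at 16 bytes each (a common double-precision choice, but still an assumption); the function name is made up for illustration.

```python
def statevector_bytes(num_qubits, bytes_per_amplitude=16):
    """Bytes needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * bytes_per_amplitude

if __name__ == "__main__":
    for n in (10, 20, 30, 40, 50):
        gib = statevector_bytes(n) / 2 ** 30
        print(f"{n} qubits: {gib:,.6f} GiB")
```

Every extra qubit doubles the memory, so the jump from 30 to 50 qubits is the jump from a laptop-sized problem to one measured in petabytes.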

There are various potential applications of quantum computing: Cybersecurity, Drug Development, Artificial Intelligence/Machine Learning, Finance, etc.

Cybersecurity: Large enough quantum computers would be capable of factoring large numbers efficiently (Shor’s Algorithm), which poses a threat to modern-day RSA encryption, but companies are already working on post-quantum cryptography protocols to enhance cybersecurity. A related line of defense is Quantum Key Distribution, which uses quantum mechanics itself to share encryption keys.
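To see why fast factoring is such a big deal for RSA, here is a toy example with tiny textbook-sized primes. To be clear, this does not implement Shor’s Algorithm: plain trial division stands in for the factoring step, which only works here because the numbers are tiny. The point is what happens after the factors are known.

```python
def smallest_factor(n):
    """Find the smallest prime factor of n by trial division (hopeless for real key sizes)."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate
        candidate += 1
    return n

# Key owner: picks two secret primes and publishes only (n, e).
p, q = 61, 53
n = p * q
e = 17

# Sender: encrypts a message using the public key.
message = 65
ciphertext = pow(message, e, n)

# Attacker: sees only n, e, and the ciphertext, then factors n.
p_found = smallest_factor(n)
q_found = n // p_found
phi = (p_found - 1) * (q_found - 1)
d = pow(e, -1, phi)  # private exponent rebuilt from the factors (Python 3.8+)
recovered = pow(ciphertext, d, n)

if __name__ == "__main__":
    print("factors:", p_found, q_found)
    print("recovered message:", recovered)
```

Once n is factored, rebuilding the private key is just a couple of lines of arithmetic. Real RSA keys are safe today only because factoring a number hundreds of digits long is out of reach for classical computers.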

Drug Development: Quantum computers have immense potential for molecular simulation, such as modelling proteins. Nature is fundamentally quantum, so using quantum computers to simulate quantum objects like molecules could be very promising.

Artificial Intelligence/Machine Learning: Artificial intelligence and machine learning algorithms are already extremely powerful and able to handle large amounts of data, but the added computing power from quantum computers could speed up runtime for some of these algorithms, which is highly favorable given that we are continually collecting more data.

Finance: Quantum computing is being explored for financial modelling, where the randomness of financial markets can be treated much like a quantum process.

Quantum computing is a rapidly growing field, and there’s definitely space for you no matter what your interests are!